Tiler Update

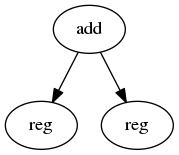

In my last blog post I talked a bit about a problem I had with generating all possible sets of rules from the tile 'grammar'. I've since solved that problem, and thought it'd be nice to share my results.

(NB: I use the word 'grammar' loosely today and on other days. In compiler jargon, a grammar is usually a thing that matches a linear stream of lexical tokens to a tree structure. In this case it's really the other way around, because it's used to match a tree and output a linear sequence. However, these things are quite similar, so I hope you'll forgive me for using the term.)

It may help if I state the problem as clearly as I can. The input is a list of rules, each mapping a head and up to two child nodes to a tile and a nonterminal symbol. A tile, to reiterate, is ultimately a piece of code that emits machine code. The nonterminal symbol (from now on: symbol) is what takes the place of a node after a tile has been selected for it. This abstraction is...